When we started Bimbeats, the idea was to build software capable of automating the extraction of data from our models. Yes, Revit was the low-hanging fruit. I used to work as a designer in Revit, then as a BIM Specialist in Revit, and finally as a software developer…yes, you guessed it, mainly building software around the Revit API. It was a no-brainer to use that expertise and start with something we knew well and whose issues we could easily define. You see, the problem was never knowing about the issues; the problem was always doing something about them.

I always knew that the bigger the Revit file, the slower it was: slower to work with, slower to open, slower to synch and save. I worked on enough projects in my Designer/BIM Specialist life to know that. Everyone knows that. Heck, it’s such a common thing to “know” that one could add it to some kind of list of “natural laws of working with computers”: the bigger the files you are working with, the longer it takes to do anything with them.

You see, back in the days when I carried a stack of floppy disks with me everywhere, everyone was acutely aware of the size of every file they pulled down. Want that new wallpaper or an MP3? Shit, it’s 2.1MB, so I will need something like WinRAR to zip it and split it into two files smaller than 1.44MB, the storage capacity of a single floppy. Not just that, but you were likely pulling that thing down via dial-up at around 5.8KB/s. 2.1MB, you say? That’s roughly 2,100KB at 5.8KB/s, so around 360s, or 6 minutes. Yes, I can do it! Whew!

What I am trying to illustrate is that at some point storage became abundant, with USB sticks storing terabytes of data and 1Gb/s internet connections. It stopped mattering what size things were. Unlimited storage is the norm these days, not the exception, and that’s how we approach file sizes today. No one really, I mean “really,” cares. That’s probably why no one has really looked at how these things affect their bottom line.

So we had Matt from Bimbeats embedded as a consultant at a few companies over the last couple of months. They are Bimbeats customers, so they pull data from their Revit files into a centralized data store, and then we were able to do some analytics on that data. You know, actually look at the data and draw some conclusions from recorded, quantifiable user behavior and the effects that it might have on the client’s bottom line.

The why

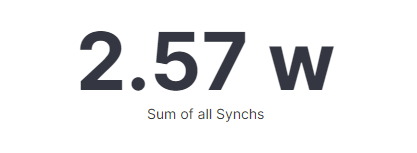

OK, so let’s establish some basic metrics to give you an idea of what we are talking about. This company has about 100 concurrent users, and Monday through Friday they do roughly 600 synchs a day. That number drops substantially on weekends, which drags down the overall average. Here’s what that synch frequency looked like over the last 3 months:

Simply put, that company spent 2.57 “whole weeks” in the last three months (90 days) just synching its models.

2.57 “whole weeks” is 431.76 hours (2.57 × 7 × 24), so in terms of a 40-hour workweek, it’s more like 10.794 “work weeks,” or 11 people doing nothing but synching all day for a whole week. I don’t know what these people bill at, but at $200/h that’s roughly $86,352, and around $345,408 a year if we extrapolate the three-month data to the whole year. That’s one heck of a chunk of money for sitting around and doing nothing.
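To make that arithmetic easy to rerun with your own numbers, here’s a minimal Python sketch. It assumes, as above, that the 3-month window is one quarter (so ×4 for a year) and a flat $200/h rate; `synch_cost` is just a hypothetical helper name, not part of any tool.

```python
HOURS_PER_WEEK = 24 * 7  # a "whole" calendar week
WORK_WEEK_HOURS = 40

def synch_cost(whole_weeks, rate_per_hour, periods_per_year=4):
    """Convert 'whole weeks' of synch time in one quarter into hours,
    40-hour work weeks, quarterly cost and an extrapolated yearly cost."""
    hours = whole_weeks * HOURS_PER_WEEK
    work_weeks = hours / WORK_WEEK_HOURS
    quarterly = hours * rate_per_hour
    yearly = quarterly * periods_per_year
    return hours, work_weeks, quarterly, yearly

hours, work_weeks, quarterly, yearly = synch_cost(2.57, 200)
print(f"{hours:.2f} h, {work_weeks:.3f} work weeks, "
      f"${quarterly:,.0f} per quarter, ${yearly:,.0f} per year")
# 431.76 h, 10.794 work weeks, $86,352 per quarter, $345,408 per year
```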

Sure, I am not saying that you can eliminate downtime expenses completely; I am saying that you should probably put some effort into minimizing them.

Konrad

According to Autodesk, it’s simple: just follow the steps they outline in their Best Practices. One of those steps is to compact the model when you synch it.

Save the file with compact selected (if saving over the same file). If this is a workshared file, it’s recommended that you archive the original central file and its backup folder before saving in the same location with the same name.

Autodesk

Have you ever wondered why they recommend compacting? It’s probably because of how Synch works in the first place. Here’s a link to a document that explains the different save options in Revit; one thing that caught my attention was the explanation of compacting.

Compact File. Reduces file sizes when saving workset-enabled files. During a normal save, Revit only writes new and changed elements to the existing files. This can cause files to become large, but it increases the speed of the save operation. The compacting process rewrites the entire file and removes obsolete parts to save space. Because it takes more time than a normal save, use the compact option when the workflow can be interrupted.

Autodesk

Mhmmmm…so you’re telling me that the normal save process leaves a bunch of garbage in the file because it’s “faster,” even though over time that garbage slows the whole thing down. Mhmmmm…explain that to me again, please. Anyway, that’s Autodesk 101 for you. Back to compacting.
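To picture what that documentation describes, here’s a deliberately simplified Python toy. It is an assumption-laden illustration, not Revit’s actual file format (which isn’t documented here): an append-only list of records stands in for the file. A normal save appends only the changed elements, which is fast but leaves old versions behind; a compact rewrites everything and keeps only the latest version of each element.

```python
class ToyModelFile:
    """Toy append-only store. NOT how Revit stores data internally; it only
    illustrates 'write just the changes' vs. 'rewrite everything'."""

    def __init__(self):
        self.records = []  # (element_id, size_kb) in write order

    def save(self, changed):
        # Normal save: append new versions of the changed elements only.
        # Previous versions stay in the file as obsolete records.
        self.records.extend(changed.items())

    def compact(self):
        # Compact save: rewrite the file keeping only the latest version
        # of each element, dropping every obsolete record.
        latest = {}
        for element_id, size_kb in self.records:
            latest[element_id] = size_kb
        self.records = list(latest.items())

    def size_kb(self):
        return sum(size_kb for _, size_kb in self.records)

f = ToyModelFile()
f.save({eid: 10 for eid in range(100)})  # initial model: 100 elements x 10KB
for _ in range(20):                       # 20 synchs, each touching 10 elements
    f.save({eid: 10 for eid in range(10)})
print(f.size_kb())  # 3000: 1000KB of live data + 2000KB of obsolete versions
f.compact()
print(f.size_kb())  # 1000
```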

The how

Sounds simple enough. I bet that since everyone knows about this, and Autodesk recommends it, everyone must be doing it. Right? Let’s have a look at the same company’s data for the last 3 months.

Yikes, 99.9% of all Synchs were not “compact Synchs.” Someone checked the “Compact” checkbox a total of 43 times out of almost 34,000 synchs. This one is rough. Let’s see how that breaks down by file.

OK, so we have 672 unique files, and only 9 of them were ever compacted. That’s 1.34% of all files. I think you are getting the point here. To make things worse, in this particular example a large share of all Compact events were applied to a single file: one file accounts for almost 50% of all Compact Synchs in the last 3 months. That’s not great, and it also makes it impossible for me to make the case for the next argument.

I wanted to look at the actual impact that compacting has on file size. To do that reliably, I would need data for comparison, right? Some before and some after. I would also prefer a substantial sample of data, which this is not. Just for posterity, let’s continue with this example; I might bring in data from other clients at a later time to further quantify my point.
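If you have your synch events exported somewhere, the numbers above are simple ratios. Here’s a minimal Python sketch; the input format, a list of `(file_name, was_compacted)` tuples, and the `compact_stats` helper are hypothetical stand-ins, not Bimbeats’ actual data model.

```python
from collections import Counter

def compact_stats(events):
    """Summarize compaction habits.

    events: iterable of (file_name, was_compacted) tuples, one per synch
    (a hypothetical export format). Returns a tuple of:
      - share of synchs that were compacted,
      - share of unique files that were compacted at least once,
      - share of all compact synchs that came from the most-compacted file.
    """
    total = 0
    files = set()
    compacts_per_file = Counter()
    for file_name, was_compacted in events:
        total += 1
        files.add(file_name)
        if was_compacted:
            compacts_per_file[file_name] += 1
    if total == 0:
        return 0.0, 0.0, 0.0
    compact_total = sum(compacts_per_file.values())
    top_share = 0.0
    if compact_total:
        top_share = compacts_per_file.most_common(1)[0][1] / compact_total
    return compact_total / total, len(compacts_per_file) / len(files), top_share

# Hypothetical sample: 5 synchs across 3 files.
events = [("A.rvt", True), ("A.rvt", False), ("B.rvt", False),
          ("C.rvt", True), ("A.rvt", True)]
print(compact_stats(events))

# The headline ratios from this post: 43 of ~34,000 synchs, 9 of 672 files.
print(f"{43 / 34000:.2%} of synchs, {9 / 672:.2%} of files")
# 0.13% of synchs, 1.34% of files
```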

Let’s have a look at this company’s results for “compact synch” and “normal synch” events and compare the average file size for each.

Like I said, the sheer number of compact events (43) is not enough data to draw any conclusions from. However, I wanted to show this table to illustrate how you could quickly visualize the percentage change for every file on your servers. All you are really looking at is the average file size for synch events that were compacted versus the average file size for synch events that were not. Again, these files are not that big.
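The per-file comparison in that table boils down to two averages and a percentage change. A minimal Python sketch, assuming a hypothetical `(file_name, was_compacted, size_mb)` record per synch; the sample numbers are made up and are not the client data.

```python
from collections import defaultdict
from statistics import mean

def size_change_by_file(events):
    """events: iterable of (file_name, was_compacted, size_mb), one per synch
    (a hypothetical export format). Returns {file_name: percent change of the
    average compacted-synch size vs. the average normal-synch size}, only for
    files that have both kinds of events."""
    sizes = defaultdict(lambda: {True: [], False: []})
    for file_name, was_compacted, size_mb in events:
        sizes[file_name][bool(was_compacted)].append(size_mb)
    changes = {}
    for file_name, groups in sizes.items():
        if groups[True] and groups[False]:
            normal = mean(groups[False])
            compacted = mean(groups[True])
            changes[file_name] = round((compacted - normal) / normal * 100, 1)
    return changes

# Hypothetical numbers, not the client data from the table.
events = [
    ("Tower.rvt", False, 480.0), ("Tower.rvt", False, 520.0),
    ("Tower.rvt", True, 400.0),
    ("Site.rvt", False, 90.0),  # never compacted, so it is left out
]
print(size_change_by_file(events))  # {'Tower.rvt': -20.0}
```

With only a handful of compact events per file, one odd synch can swing these averages a lot, which is exactly why 43 events isn’t enough to conclude anything.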

We usually advise customers to focus on the biggest offenders, the biggest projects in the office, first. These are bound to have the biggest impact. I am not just saying this: we looked at the data, and the top 10 biggest projects account for roughly 60% of all Synch/Open events.
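Here’s one way to check that “top 10 biggest projects” share against your own data. It’s a minimal Python sketch, assuming you can get a size per project and a project name per Synch/Open event; `share_of_events_in_biggest` and the sample office are hypothetical.

```python
from collections import Counter

def share_of_events_in_biggest(project_sizes_mb, event_projects, n=10):
    """Fraction of Synch/Open events that belong to the n biggest projects.

    project_sizes_mb: {project_name: size_in_mb}
    event_projects: one project name per Synch/Open event
    """
    biggest = set(sorted(project_sizes_mb, key=project_sizes_mb.get,
                         reverse=True)[:n])
    counts = Counter(event_projects)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(count for name, count in counts.items() if name in biggest) / total

# Hypothetical office with 4 projects and 100 events.
sizes = {"A": 900, "B": 600, "C": 300, "D": 80}
events = ["A"] * 50 + ["B"] * 30 + ["C"] * 15 + ["D"] * 5
print(share_of_events_in_biggest(sizes, events, n=2))  # 0.8
```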

If this illustrates anything, it’s that there is a lack of file maintenance that should be addressed. Also, if you don’t perform certain actions, you can’t really measure their effectiveness.

The good news is that we did work with a client that had one big project where the BIM Manager compacted the files overnight on a regular basis, and we were able to isolate that data for analysis. Sometimes all it takes is one person at the company to set the example for everyone else. Here are the results.

What you see above is a project that had multiple files ranging in size from ~500MB to less than 100MB. The important thing about these files is that they were compacted on a regular basis throughout the life of the project, so when we averaged the file sizes recorded for compacted synch events and compared them with normal synchs, the savings in file size were staggering. ~38% is a lot! We ran the same analysis for a few other “good” projects, and the results were not close to 38%, so I am not saying that every project will get savings of that magnitude. Some will, and the rest will get some savings. Period.

Whether that is 30% or 10% doesn’t really matter, because this can be a form of passive income. What I mean by that is that you can set up a batch processing machine in your office and have it compact all models overnight. Hardware costs in this scenario are minimal, and it’s a one-time setup. Here’s another project that was compacted on a regular basis, and it had an ~11% reduction in average file size.

Here’s another one, with a file size change of around 9%.

The how much

OK, so now you know that you can reduce your file size by 10-30% or even more, just by compacting your files on a regular basis. Great. What does that do for users/companies in terms of time savings?

First, let’s try to figure out the relationship between File Size and Synch Duration, and what impact reducing one would have on the other. One proxy for that relationship, albeit a simplified one, is to plot lots of different files against their Synch durations and then calculate a Linear Regression (trend line) for them. We did that for around 400,000 Synch/Open events across 3 different companies and roughly 400 users. Here are some plots and the trends they show.

The above is for files between 500MB and 1GB. At 1GB we are at around 375 seconds, with the Duration Time trending down to around 280s at ~750MB and around 185s at 500MB. Basically, as the file gets 50% smaller, it’s expected to take around 50% less time to Synch/Open.
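As a sanity check on the “1% smaller, ~1% faster” rule of thumb, here’s a minimal ordinary least-squares fit in plain Python. Note the assumption: it only refits the three values read off the trend line above (not the ~400,000 raw events), so it illustrates the technique rather than reproducing our analysis. The intercept comes out close to zero, meaning duration is roughly proportional to file size, and the elasticity (percent change in duration per percent change in size) lands near 1.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Three values read off the 500MB-1GB trend line above (not raw data).
sizes_mb = [500, 750, 1000]
durations_s = [185, 280, 375]
slope, intercept = fit_line(sizes_mb, durations_s)
print(round(slope, 2), round(intercept, 1))  # 0.38 -5.0

# Elasticity at 750MB: % change in duration per 1% change in file size.
x = 750
elasticity = slope * x / (slope * x + intercept)
print(round(elasticity, 2))  # 1.02
```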

Our estimation here is that for every 1% reduction in file size, you can reduce your Synch/Open times by around 1%.

Again, this is not guaranteed, but rather an “informed guess.”

With the above in mind, let’s assume that this 100-user company:

- spent a total of 431.76 hours synching in the last 3 months, and

- could reduce file size by approximately 10% by compacting files regularly.

Then we can estimate potential savings of around $34,540 a year on Synch waiting times alone (431.76h × 10% × 4 × $200/h). Guess what the kicker is…they also spent around 8 “whole weeks” on Open events. That’s another 1,344 hours in the last 3 months, so if we extrapolate the same 10% reduction to the whole year, it would be worth around another $107,520 (1,344h × 10% × 4 × $200/h), bringing the total potential savings to around $142,060. Think about that for a second.
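The savings estimate is the same arithmetic applied to the hours from the last 3 months. Here’s a minimal Python sketch, assuming (as above) that Synch/Open durations drop by the same ~10% as file size, a flat $200/h rate, and ×4 to get from a quarter to a year; `yearly_savings` is a hypothetical helper.

```python
HOURS_PER_WEEK = 24 * 7

def yearly_savings(quarter_hours, reduction, rate_per_hour, quarters=4):
    """Yearly value of the time saved if durations shrink by the same
    fraction as file size (the ~1%-for-1% assumption), at a flat rate."""
    return quarter_hours * reduction * quarters * rate_per_hour

synch = yearly_savings(431.76, 0.10, 200)              # 2.57 whole weeks of synching
open_ = yearly_savings(8 * HOURS_PER_WEEK, 0.10, 200)  # 8 whole weeks = 1,344h of opening
print(f"synch ${synch:,.2f} + open ${open_:,.2f} = ${synch + open_:,.2f}")
# synch $34,540.80 + open $107,520.00 = $142,060.80
```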

Can your company afford to be wasting that kind of cash?

Konrad

The solution

What would I propose as a solution here? I can think of a couple of different things.

- The first and obvious one is to reach out to your BIM Managers and reiterate that you want compacting back on the agenda as part of the model maintenance routine. That’s fine. We are task-oriented people; I am sure most of us can work that into our schedules.

- Another potential solution is to set up a batch processing machine with a free tool like Revit Batch Processor and start compacting overnight.

- There is also a third idea that I think is worth considering. What about a tool that would monitor every file in the office and check when it was last compacted? If it’s been more than 2 weeks, the next time someone goes to synch, they are asked to compact, or the tool checks that box for them in the background. (Matt likes a different trigger here: instead of measuring time, measure the number of times a file was saved, and once it has been saved X times, trigger a compact.) The reason I like this idea is that it gets the job done automatically: there is no project setup involved, no batch processing machines, and the added bonus is an educational piece. Users would now be “encouraged” (or forced) to compact every few weeks (or every few synchs), even though who gets chosen for the task would be completely random. Still, I like the idea of letting users know what’s going on. Finally, there would be no tear-down needed when a project is over. If no one is opening and synching the file anymore, the tool would simply stop triggering. Done.
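The core of that third idea is a small decision rule. Here’s a minimal Python sketch of both triggers, the 2-week age rule and Matt’s synch-count rule. `FileHistory` and `should_compact` are hypothetical names for a tool that doesn’t exist yet. Inside Revit, “checking the box for them” would presumably mean setting `SynchronizeWithCentralOptions.Compact` to true before calling `Document.SynchronizeWithCentral`, but that wiring isn’t shown here and should be verified against the Revit API docs for your version.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class FileHistory:
    """What the hypothetical monitoring tool would track per central file."""
    last_compacted: Optional[datetime]  # None if never compacted
    synchs_since_compact: int

def should_compact(history, now, max_age=timedelta(weeks=2), max_synchs=None):
    """Decide whether the next synch of this file should be a compact synch.

    Count-based rule (Matt's preference): if max_synchs is given, trigger once
    the file has been synched that many times since its last compact.
    Time-based rule (default): trigger when the last compact is older than
    max_age, or when the file has never been compacted.
    """
    if max_synchs is not None:
        return history.synchs_since_compact >= max_synchs
    if history.last_compacted is None:
        return True
    return now - history.last_compacted > max_age

now = datetime(2024, 1, 31)
print(should_compact(FileHistory(datetime(2024, 1, 10), 40), now))  # True: 21 days old
print(should_compact(FileHistory(datetime(2024, 1, 25), 40), now))  # False: 6 days old
print(should_compact(FileHistory(datetime(2024, 1, 25), 40), now,
                     max_synchs=30))  # True: 40 synchs since last compact
```

Because the rule only runs when someone actually synchs, a dormant project never triggers it, which matches the “no tear-down” point above.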

The plug

Now is the time for a shameless plug. Two things:

- First, this analysis and many more are possible with a tool like Bimbeats. Go check it out at Bimbeats.com. If you like it, reach out to me, and I will be happy to schedule a demo with you. Here’s my calendar.

- Second, I am proposing a tool here, but unfortunately, at the moment, it’s just an idea. Since I work as a consultant and software developer, if you are interested in a tool like that, perhaps we can build it for you. Drop me a line at konrad [at] archi-lab [dot] net.

Disclaimers

Finally, some disclaimers. Revit is not going to behave the same for everyone. Obviously, we did our best to base these assumptions on pretty large data sets, and we think these data sets were pretty consistent and reliable. However, things do slip through the cracks, so don’t treat this as some kind of exact science. Results may vary from company to company, from hardware to hardware, from internet connection to internet connection, etc. You catch my drift, right? Be gentle with us. :-)

Also, I know this is a single-factor analysis. I will for sure be looking at more things that affect file sizes in Revit in future posts, and we will be making more recommendations.

Finally, I personally believe that hardware is the single biggest contributor to this issue. However, instead of buying everyone a new, much more powerful computer, we can start by exhausting all the other options first. We all have a budget, folks.

Thank you for publishing this! It’s great that you guys are doing data-driven research on this!

One general thought I had: did you do any comparisons of syncing times directly before and after a compact? I realize you don’t have many models that were compacted, so it would be a small data pool, but it would still be interesting nonetheless.

The devil’s advocate argument here is: “what if there’s a correlation but not a causation between file size and sync performance?” If that’s the case, file size could still be a rough indicator of model performance (as it could potentially trend in the same manner as the hypothetical ‘underlying cause’), while at the same time compacting the model would do little to boost model performance.

Pieter,

Oh, totally, I am not saying that compacting is the silver bullet here. Checking the duration of a synch before and after compacting is a bit of a fool’s errand. As Autodesk explained in their documentation for the compacting option, a normal synch doesn’t purge obsolete data, so each synch is an additive process that grows the file size over time. If we measured the impact of compacting only right after the compact, we would be looking at the file on its “best” day. That’s because each subsequent synch, in theory, adds to the file size and potentially slows it down, until you compact again to remove some of the deadwood.

Cheers!

-K

Hi Konrad.

Are these tests conducted on central files located on the local network, on Revit Server, or on the Autodesk Cloud?

All of the above. It shouldn’t matter unless files move from local to cloud or vice versa, because, as we all know, synch/open durations vary across these environments. They do, so a move like that would be a problem for sure. However, assuming that projects don’t move around like that, the durations should be consistent for a given model and location and still be able to illustrate whether compacting has any impact on them. Keep in mind that any file size reduction benefits would actually be even greater in cloud environments, because there you are not only worried about the model experience once it’s downloaded to your local drive, but also about the internet connection and Autodesk server throughput, which will always be negatively impacted by bigger files moving across the wire.